Earlier in the week, we reported on the Open Music App Collaboration Manifesto – a call to iOS music developers to push ahead with inter-app collaboration features.

The Manifesto defines a set of best practices that should help make the user experience as great as possible for people who want to run music apps in parallel. One scenario for this would be using a controller app to play a synth app running in the background while a drum machine plays.

Developer Rob Fielding (Mugician) has posted a fairly lengthy response that challenges developers to move beyond creating software instruments with virtual piano keyboards:

iPads and iPhones are posing a challenge to the instrument industry at this time.

iOS devices are essentially rapid prototyping devices that let you make almost anything you want out of a combination of touchscreen, audio, MIDI, accelerometer, networking, etc. iOS developers are becoming the new instrument manufacturers, or are at least doing the prototypes for them at a very high turnover rate.

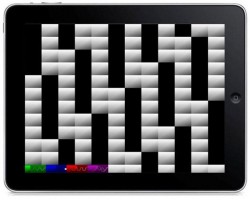

Multitouch has a unique characteristic of being tightly coupled to the dimensions of human hands. It does away with discrete keys, knobs, and sliders. If you put your hand on a screen in a relaxed position with your fingers close to each other without touching, you can move them very quickly. Every spot that a user touches should be an oval a little bit larger than a fingertip. If you make the note spots any smaller, the instrument quickly becomes unplayable. So lining up all the notes beside each other to get more octaves does not work. The awkward stretch to reach accidentals at this small size is equally unhelpful.

A densely packed grid of squares that are about the size of a fingertip is exactly what string instrument players are used to. So, a simple row of chromatics stacked by fourths is what we want. We can play this VERY fast, and have room for many octaves. It’s guitar layout. Furthermore, the pitch handling can be much more expressive because it’s a glass surface. We know exactly where the finger is at all times, and frets are now optional, or simply smarter than they were before.
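The layout Fielding describes maps each grid cell to a pitch with simple arithmetic. A minimal sketch, assuming a hypothetical `note_at` helper, rows (strings) a perfect fourth (5 semitones) apart, chromatic columns one semitone apart, and a lowest note of MIDI 40 (E2). Strictly, this is all-fourths tuning; a standard-tuned guitar has one major third, between its G and B strings.

```python
FOURTH = 5  # semitones between adjacent rows ("strings")


def note_at(row: int, col: int, base: int = 40) -> int:
    """Return the MIDI note for a grid cell; row 0 is the lowest string.

    base=40 (E2) is an assumed default, not something the post specifies.
    """
    return base + FOURTH * row + col


if __name__ == "__main__":
    # Print a hypothetical 6-row by 12-column grid, highest row on top.
    for row in reversed(range(6)):
        print(" ".join(f"{note_at(row, col):3d}" for col in range(12)))
```

Because each row repeats the one below shifted up five semitones, the same pitch is reachable in more than one place (row 1, column 0 and row 0, column 5 are both MIDI 45), so a player can choose whichever position keeps the hand compact.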

An interesting characteristic of a stack of strings tuned to fourths is that there is a LOT of symmetry in the layout. There are no odd shapes to remember that vary based on the key that is being played in.
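The symmetry claim can be checked directly: on a grid where pitch is a linear function of row and column, a chord shape yields the same intervals wherever it is placed, and transposing is just a translation. A sketch under the same assumptions as a fourths-stacked grid; the `MAJOR_TRIAD` shape and the `intervals` and `transpose` helpers are hypothetical illustrations.

```python
FOURTH = 5  # semitones between adjacent rows (strings)


def note_at(row: int, col: int, base: int = 40) -> int:
    """MIDI note for a cell on a chromatic grid with rows a fourth apart."""
    return base + FOURTH * row + col


# Hypothetical chord shape as (row, col) offsets from the root cell:
# root; one row up, one column left (5 - 1 = 4, a major third);
# two rows up, three columns left (10 - 3 = 7, a perfect fifth).
MAJOR_TRIAD = [(0, 0), (1, -1), (2, -3)]


def intervals(shape, row: int, col: int) -> list[int]:
    """Semitone distance of each cell in the shape from the root at (row, col)."""
    root = note_at(row, col)
    return [note_at(row + dr, col + dc) - root for dr, dc in shape]


def transpose(row: int, col: int, semitones: int) -> tuple[int, int]:
    """Transposing along a row means moving `semitones` columns."""
    return row, col + semitones


if __name__ == "__main__":
    # Same shape, same intervals, whatever the starting cell (i.e. the key).
    for row, col in [(0, 3), (1, 7), (2, 10)]:
        print((row, col), note_at(row, col), intervals(MAJOR_TRIAD, row, col))
```

On a piano keyboard the black/white pattern of a major triad changes from key to key; here the intervals depend only on the offsets, never on the starting cell.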

Transposition is simply moving up or down some number of squares. This even applies to tuning. Just play a quartertone lower than you normally would and you are playing along with something that was tuned differently without actually retuning the instrument. It is a perfect isomorphic instrument, with a layout that’s already familiar to 80% of players. This is the real reason why this layout has a special affinity for the touch screen.
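Playing "a quartertone lower" means sending a pitch between two MIDI note numbers. One common way to do this is to send the nearest note plus a 14-bit pitch-bend message. A minimal sketch, assuming a hypothetical `fractional_to_midi` helper and a receiver whose pitch-bend range is ±2 semitones (a common default, but the receiving synth must actually be set to match).

```python
import math

BEND_CENTER = 8192  # 14-bit pitch-bend midpoint (no bend)
BEND_MAX = 16383    # largest 14-bit value


def fractional_to_midi(pitch: float, channel: int = 0, velocity: int = 100,
                       bend_range: float = 2.0):
    """Return (pitch_bend_msg, note_on_msg) as lists of MIDI bytes.

    `pitch` is a fractional MIDI note number, e.g. 63.5 for a quartertone
    below E4 (64). `bend_range` is the assumed receiver bend range in semitones.
    """
    note = math.floor(pitch + 0.5)   # nearest equal-tempered note
    offset = pitch - note            # remaining semitones, in [-0.5, 0.5)
    bend = BEND_CENTER + round(offset / bend_range * BEND_CENTER)
    bend = max(0, min(BEND_MAX, bend))
    # Pitch bend: status 0xE0 | channel, then 7-bit LSB, then 7-bit MSB.
    pitch_bend = [0xE0 | channel, bend & 0x7F, (bend >> 7) & 0x7F]
    note_on = [0x90 | channel, note, velocity]
    # Send the bend first so the note starts at the intended pitch.
    return pitch_bend, note_on


if __name__ == "__main__":
    print(fractional_to_midi(63.5))  # a quartertone below E4
```

Pitch bend applies to a whole MIDI channel, so playing several independently bent notes at once generally means putting each sounding note on its own channel.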

Fielding also shared this microtonal iPhone synth jam as an example of why he thinks iOS calls for moving beyond the traditional piano approach for MIDI controllers:

http://www.youtube.com/watch?v=FUX71DAelno

Fielding goes on, in his post, to dig deep into what he sees as best practices for MIDI on iOS.

In essence, Fielding is making the case against ‘horseless carriage thinking’ on the iOS music platform.

Horseless carriage thinking is the idea that our natural tendency with new technologies is to try to make them conform to old ones, which is often counter-productive. Early automobiles were designed to be horseless carriages, which look comical in retrospect.

Are virtual piano keyboards the best interface for playing tablet-based instruments? Fielding argues no – and that designers need to get creative and find user interfaces that are natural fits for multi-touch devices and their capabilities.

Interesting stuff – and it reflects our initial impressions of the iPad as a music platform, when the device was first introduced:

The iPad won’t replace the power of a dedicated music computer – but it is creating a new platform that will support new types of mobile music making and new ways of controlling and playing music.

While a lot of great music apps have been done for iOS and other tablet computers, few apps have deeply explored the capabilities inherent in tablet computing for creating something new. This is probably one of the reasons for some of the anti-iPad backlash; a piano MIDI controller is always going to be a hell of a lot more playable than a virtual piano.

Give Fielding’s thoughts on MIDI Instruments on iOS a look – and let us know if you think it’s time for tablet music app developers to get more creative with their user interfaces.

Yeah, I think it's time to move on from the piano keyboard interface on most apps, if not MIDI controllers too. It's a historical convenience that's run its course in light of modern design.

BTW, can't wait for Pythagoras!

Just awesome! The best instrument in terms of playability I know is Mugician. Maybe I think this way because I played guitar for many years before synths, but it really is more convenient than any other input interface! It is the most natural way to play on a multitouch surface. Damn, I want this kind of MIDI controller, or maybe a synth with traditional knobs, like in iMS-20, but with a keyboard like Mugician has.

The iPad drops time over MIDI or wifi, too often for me to trust it in a live situation, which is where I want to use it the most. At the moment I don't trust it. The only good app so far for the iPad is iElectribe, and even the tempo goes mad on that when connected via MIDI.

And please, no more all-in-one studios and synths. I have loads of these apps already and all of them are of no use whatsoever. SAVE YOUR MONEY: AVOID BUYING AN iPad for making music, at the moment.

Is the latency a real problem? I've not used iOS apps that much, but I've never experienced any noticeable latency when connecting my iPhone to my MacBook Pro (I've used Little MIDI Machine via CoreMIDI, and Haplome and TouchOSC via OSC). I created an ad-hoc wifi network with the MBP and, as I said, never experienced any latency. So could the problem be with routers or the operating system (I've never tried it on Windows) instead?

There is a huge difference between the latency problems caused by knobs and sliders being late and those caused by note-down/note-up being late. For drum machines and sequencers, you can batch data and send it out predictively so that you are on time. But if you are playing an instrument like piano, guitar, etc., you will end up throwing it on the ground in disgust if you are playing arpeggios, scales, and bends in 16th notes. MIDI over Wifi does not, and cannot, work, though some people get better results on average depending on their network.

The MIDI standard was designed around a cabling and signaling scheme that meets its timing guarantees, and this part of the standard is thrown away when you go to Wifi.
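For context on the cable side of this: MIDI 1.0 over a DIN cable is a serial link at 31,250 bits per second, with each byte framed as 10 bits (a start bit, 8 data bits, a stop bit). The delay per message is therefore small and fixed. A minimal sketch of that arithmetic; the `wire_time_ms` helper and the six-note chord are hypothetical illustrations, and nothing here models Wi-Fi, where delay varies from packet to packet.

```python
MIDI_BAUD = 31_250   # MIDI 1.0 DIN serial rate, bits per second
BITS_PER_BYTE = 10   # 1 start bit + 8 data bits + 1 stop bit


def wire_time_ms(num_bytes: int) -> float:
    """Milliseconds needed to clock num_bytes onto a MIDI cable."""
    return num_bytes * BITS_PER_BYTE / MIDI_BAUD * 1000.0


if __name__ == "__main__":
    # A note-on is 3 bytes: status, note number, velocity.
    print(f"note-on: {wire_time_ms(3):.2f} ms")
    # Hypothetical six-note strum using running status:
    # one full 3-byte message, then five 2-byte messages.
    print(f"6-note chord: {wire_time_ms(3 + 5 * 2):.2f} ms")
```

Roughly a millisecond per note-on, every time, is what a player gets from the cable; the problem described above is not average speed but the loss of that predictability.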

Thanks for mentioning Little MIDI!

As Rob mentions, sequencers have it easier. I send the notes early with Little MIDI Machine specifically to make it work well over WiFi. I spent a lot of time listening to badly timed sequences to figure out how early I had to send things to get it sounding solid. You'll notice if you change the value of a slider or mute button right before the sequence step LED hits it, the change to the sequence might not be heard until the next go round in the loop. That's because there's 150ms or so of latency built in, and that note was already sent to CoreMIDI.
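The "send notes early" approach is a lookahead scheduler: on each poll, every step due within the next lookahead window is sent immediately, timestamped with its intended play time. The sketch below is a generic version of that technique under stated assumptions (a hypothetical `LookaheadScheduler` class, 16th-note steps, a 150 ms window taken from the comment above); it is not Little MIDI Machine's actual code.

```python
from dataclasses import dataclass


@dataclass
class LookaheadScheduler:
    bpm: float
    steps_per_beat: int = 4   # 16th-note steps
    lookahead: float = 0.150  # seconds of built-in latency (assumed)
    next_step: int = 0        # first step not yet sent

    def step_time(self, step: int) -> float:
        """Intended play time of a step, in seconds from the start."""
        return step * 60.0 / (self.bpm * self.steps_per_beat)

    def poll(self, now: float) -> list[tuple[int, float]]:
        """Return (step, play_time) for every unsent step due before now + lookahead.

        The caller would send each one right away, timestamped with play_time,
        so it arrives early rather than late. Once returned, a step is never
        returned again, so edits made to it afterwards miss this pass of the loop.
        """
        due = []
        while self.step_time(self.next_step) < now + self.lookahead:
            due.append((self.next_step, self.step_time(self.next_step)))
            self.next_step += 1
        return due


if __name__ == "__main__":
    sched = LookaheadScheduler(bpm=120)  # 16th notes every 0.125 s
    for now in (0.0, 0.1, 0.2, 0.3):
        print(now, sched.poll(now))
```

This is why a slider change made just before a step lights up can miss that step: by then the step is already inside the window and has been handed to CoreMIDI.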

Real time controls don't have that luxury, they can't predict when your finger is going to touch the screen!

Unsolicited feature request for Little MIDI – the ability to transpose both sequences via MIDI input.

I'm not experiencing any latency problems on my home wifi network, but only the iPad is on wifi; the computer is wired. Wifi can be problematic due to interference, congestion, and the quality of your wifi devices, but if you minimize these, MIDI works over wifi.