Bit Shape has introduced TC-Data, a MIDI and OSC (Open Sound Control) controller app that sends messages to other apps and hardware.

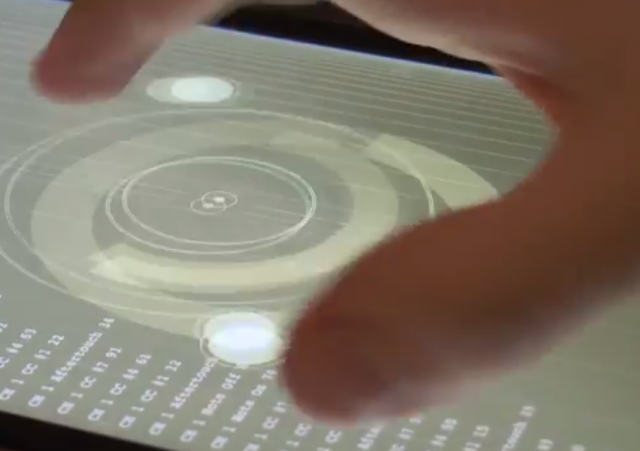

TC-Data is a new MIDI/OSC controller which, instead of trying to recreate existing piano-style controllers, explores the possibilities of creating an interface specifically for multi-touch screens.

TC-Data takes the multi-touch controllers from the TC-11 Synthesizer and opens them up to any external software.

The app has over 300 multi-touch and device motion controllers and triggers which can be assigned to any MIDI or OSC message output. TC-Data is not a synthesizer, but it is designed to control them, plus any other MIDI or OSC software.
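To make "assigned to any MIDI or OSC message output" concrete, here is a minimal sketch of what such messages look like on the wire. It is not TC-Data's own code: the function names, the controller number 74, and the OSC address `/touch/1/x` are hypothetical, chosen only for illustration. The byte layouts follow the standard MIDI Control Change and OSC 1.0 message formats.

```python
import struct


def midi_cc(channel: int, controller: int, value: float) -> bytes:
    """Build a 3-byte MIDI Control Change message from a 0.0-1.0 value."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= controller <= 127:
        raise ValueError("controller must be 0-127")
    clamped = min(max(value, 0.0), 1.0)
    # Status byte 0xB0 marks Control Change; the low nibble is the channel.
    return bytes([0xB0 | channel, controller, round(clamped * 127)])


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC message carrying one big-endian float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


# Hypothetical mapping: one touch's X position drives both outputs.
touch_x = 1.0
cc_bytes = midi_cc(channel=0, controller=74, value=touch_x)
osc_bytes = osc_float_message("/touch/1/x", touch_x)
```

The OSC packet would typically be sent as a single UDP datagram to whatever host and port the receiving software listens on; those values depend on your setup and are not specified here.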

Here’s the official video intro to TC-Data:

Features:

- Inter-app control via CoreMIDI

- OSC over Wi-Fi

- Support for external MIDI interfaces

- MIDI input passthrough

- Virtual MIDI output

- MIDI over Bluetooth via 3rd party apps

- Fully assignable touch controllers

- Gyroscope and accelerometer motion control

- 300+ controllers and triggers

TC-Data is available now for US $19.99 in the App Store.

very cool concept but thought the demo was total garbage. nothing more than pointless accidental twiddling.

would love to see more demos of precision usage instead of “wow look how many / fast you can go!!!”

seems vapid that way, when i bet the app isn’t

it’s all a matter of usage, their synth is by far one of the best anywhere mostly because of the control scheme so i can only imagine this app used well will be amazing.

bad demo – pointless accidental twiddling.

the app is good

but they did not show this in the demo

I only know TC-11. And if it’s anything like it, it’s well worth the money.

i dunno it’s a controller. it’s down to you and your imagination. if you can go fast why couldn’t you be precise?

It seems like we’ve seen this tracking multiple points on the touchscreen before. That doesn’t make this any less (or more) cool.

Makes me wonder at what point they’ll stop with all this glass rubbing and just mount a couple or three cameras that will just track your hand movements in space (not unlike Leap Motion, but with greater range and less CPU hogging).

> Makes me wonder at what point they’ll stop with all this glass rubbing

> and just mount a couple or three cameras that will just track your hand movements in space

Perhaps like this? https://www.youtube.com/watch?v=wTOlenq5sT0

I’ve open-sourced the controller-half of the Space Palette software, which you can train on any flat surface with holes of any number or shape, each hole becoming a three-dimensional mousepad in mid-air that sends 3D cursor data in TUIO/OSC format, see http://multimultitouchtouch.com

That is SUPER cool! A daringly “think big” design! Thanks for sharing.

yeah, core concept seems really awesome, but demo video is garbage, dudes twiddling around on a pad that doesn’t even have any cables?

Isis, the iPad does not need cables to control MIDI; it uses CoreMIDI over Wi-Fi or an ad hoc network. I do agree about the random twiddling. I have been playing with TC-Data for a couple of hours, and there are much better example uses that could have been shown in the video.

i like the part in the video where the young woman uses this app to control a hardware synth while also playing its keys. Been looking for a way to turn any synth into a Bebot-style controller.

I’m still looking for the way to network it for input into Logic. I’m probably missing something glaringly obvious, but I’ve been scouring the manual you can download and coming up with nothing.

@stephen

first, on your iPad under Settings > Wi-Fi, connect your iPad to your computer’s Wi-Fi network, or to the same network as the computer (if you do not have Wi-Fi you can create a network from your computer)

next launch tc-data

on your mac (assuming because you have logic)

go to your applications folder,

find the utilities folder

in utilities find the Audio MIDI Setup application (its icon is a little keyboard)

open it, it will only open the audio window

go to the Window menu, Show MIDI Window

double click the “NETWORK” icon

under my sessions click the +

it will create “session 1”

your ipad should show up under directory

click it, then click connect

crap didn’t work, can’t even get it running via Reaktor

I haven’t got this so can’t help on that front, but I use Konkreet Performer with Logic and Reaktor. This looks like a much more powerful version of that, with the ability to build your own interfaces. The problem often is, though, that if the configuration is nigh on impossible to figure out or get to work, then it’s not so good.

i’ve wanted to use kontakt more, but it’s a very confusing interface to me. what do you use it for in logic?

konkreet, not kontakt, sorry…

Completely wanky examples, but this is the direction that will bear fruit for the next generation of “interface standards”. Some methods will emerge as useful, others won’t, and we will further refine the winners.

I would like to see someone make some effective demos that would bridge the gaps so more people could see the possibilities. For example, a simple setup that would let each finger touch replace an analog-emulating fader, allowing the hand to move in a more natural open/close motion but yielding the same multi-fader control result. Any sized hand could touch the screen at any point and move the faders from their current position with as much granularity as they desired. This is much more precise control, and more adaptable to different users, than a fixed grid of faders spaced for the mythical “average hand” and traveling only in the Y axis. Add some quick select options on the side or top for picking which five (or ten!) faders you want out of a larger group, and you have a very fast and effective mixing surface that doesn’t need to cost thousands of dollars and take up a huge desk space. And every bit of it can be done with existing app tech right now.
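The "touch anywhere, move the fader from its current position" idea can be sketched roughly as follows. This is an illustration only, not anything TC-Data is known to do: the class and method names are hypothetical, and it assumes each new touch grabs the lowest-numbered free fader and that `y` is normalized to 0.0-1.0 with larger values meaning "up".

```python
from dataclasses import dataclass, field


@dataclass
class RelativeFaderBank:
    values: list                                   # fader positions, 0.0-1.0
    sensitivity: float = 1.0                       # fader travel per unit of finger travel
    assigned: dict = field(default_factory=dict)   # touch id -> fader index
    last_y: dict = field(default_factory=dict)     # touch id -> last y seen

    def touch_down(self, touch_id, y):
        """Bind a new touch to the lowest free fader; the fader does not jump."""
        taken = set(self.assigned.values())
        free = [i for i in range(len(self.values)) if i not in taken]
        if not free:
            return None  # more fingers than faders: ignore the extra touch
        self.assigned[touch_id] = free[0]
        self.last_y[touch_id] = y
        return free[0]

    def touch_move(self, touch_id, y):
        """Nudge the bound fader by the finger's vertical travel."""
        if touch_id not in self.assigned:
            return None
        index = self.assigned[touch_id]
        delta = (y - self.last_y[touch_id]) * self.sensitivity
        self.last_y[touch_id] = y
        self.values[index] = min(max(self.values[index] + delta, 0.0), 1.0)
        # Return the fader index and a 7-bit value suitable for a MIDI CC.
        return index, round(self.values[index] * 127)

    def touch_up(self, touch_id):
        """Release the fader; its value stays where the finger left it."""
        self.assigned.pop(touch_id, None)
        self.last_y.pop(touch_id, None)
```

Because only the change in `y` matters, where the finger first lands is irrelevant, which is what lets any hand size work. Lowering `sensitivity` below 1.0 would trade fader travel for finer granularity.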

Hey, that is a wonderful idea! Hope someone develops it. Would be very useful.

you can do something similar to the “average hand” idea

I’m working on getting this to run via midi into logic/reaktor, then I’ll make some demos